Welcome to the Konkle Lab!

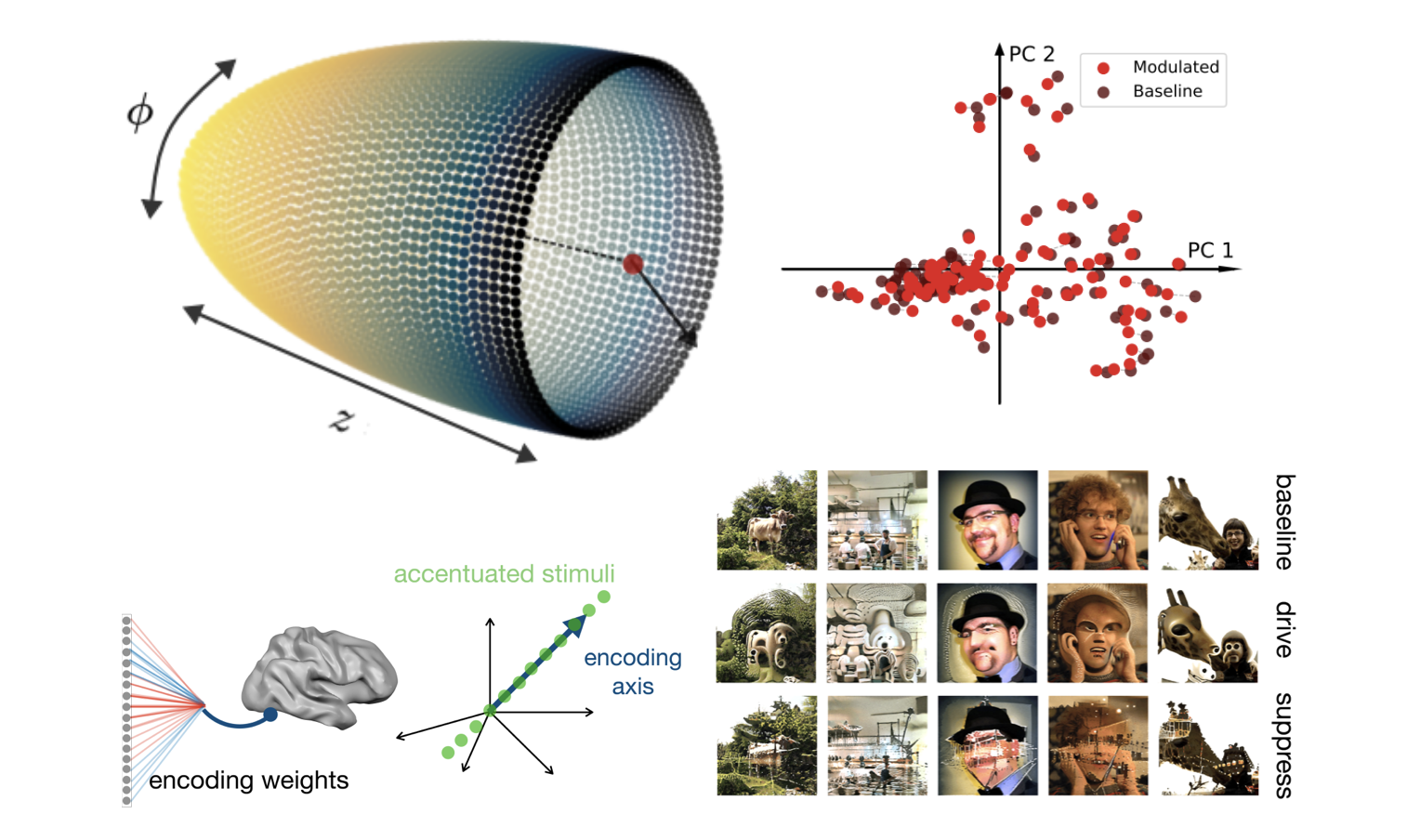

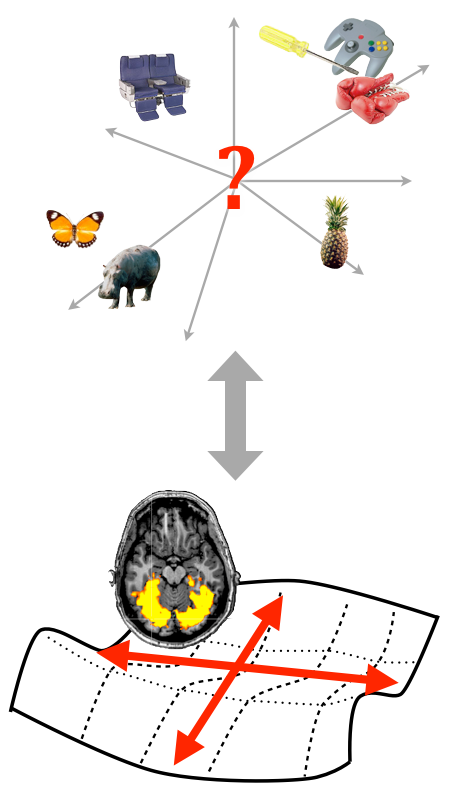

Our broad aim is to understand how we see and represent the world around us. How is the human visual system organized, and what pressures guide this organization? How does vision interface with action demands, so we can interact in the world, and with conceptual representation, so we can learn more about the world by looking?

Our approach starts from the premise that the connections of the brain are driven by powerful biological constraints. As such, where different kinds of information are found in the brain is not arbitrary, and serves as a clue to the underlying representational goals of the system. Our research is inspired by considering the experience and needs of an active observer in the world. This thinking continually deepens our understanding of how behavioral capacities are expectant in the local and long-range architecture of the brain, and how neural networks absorb the statistics of visual experience and the consequences of actions to realize the functions latent in the structure.

The techniques we use include both empirical and computational methods. We use functional neuroimaging and electroencephalography to measure the human brain. We develop computational models to link network architecture with cortical topography. We use behavioral methods to measure human perceptual and cognitive capacities. We also draw on machine vision and deep learning approaches to gain empirical traction on the formats of hierarchical visual representation that can support different visual behaviors.

contact:

talia_konkle@harvard.edu | CV | google scholar | @talia_konkle

William James Hall 780

33 Kirkland St

Cambridge, MA

(617) 495-3886
